Meet the Computer 13,000× Faster Than Today’s Machines — Google’s Willow Chip Runs OTOC Quantum Echoes

Quantum Echoes: When Data Starts Talking Back in Time

When your data starts echoing across time — you know it’s gone quantum!

In the race toward the future of computing, one milestone just changed everything — a verifiable quantum advantage.

For the first time in history, a quantum computer has successfully run a verifiable algorithm faster than even the most powerful classical supercomputers — a staggering 13,000× speedup.

That algorithm measures a quantity called the Out-of-Time-Order Correlator (OTOC), and Google’s Quantum AI team has named it Quantum Echoes.

This isn’t just another lab experiment; it’s a leap that brings us closer to quantum computers solving real-world problems in chemistry, materials science, and medicine.

What Exactly Is the Out-of-Time-Order Correlator (OTOC)?

At its core, the OTOC is a way to measure how information spreads and becomes scrambled inside a quantum system.

It tells us how a tiny disturbance — say, tweaking one qubit — ripples across an entire quantum network over time.

In many classical systems, information spreads in ways that can be followed step by step. But in quantum systems, information can spread, interfere, and entangle in ways that are beautifully chaotic and incredibly powerful.

So, how does OTOC measure that?

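Think of the process as a quantum echo experiment: run the system forward in time, nudge one qubit, run the evolution backward, and then check how much of the original signal returns.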

If the echo fades quickly, it means the information spread widely — indicating quantum chaos.

If the echo remains strong, the system maintained coherence — a key trait for quantum stability.

That’s why the quantity is called the “Out-of-Time-Order Correlator”: it measures correlations between operations applied out of their normal time order (some evolution runs forward, some backward).
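To make the echo idea concrete, here is a minimal NumPy sketch on 8 simulated qubits. It is a toy illustration, not Google’s circuit: the "scrambling" evolution is assumed to be a Haar-random unitary, and the helper names (`random_unitary`, `single_qubit_op`, `otoc`) are made up for this example. It evaluates the echo signal ⟨ψ| W(t)† V† W(t) V |ψ⟩ with W(t) = U† W U, once with no evolution at all and once with the random evolution.

```python
import numpy as np

def random_unitary(dim, rng):
    """Draw a Haar-random unitary via QR of a complex Gaussian matrix."""
    z = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    q, r = np.linalg.qr(z)
    # Rescale each column by a phase so the result is Haar-distributed.
    phases = np.diag(r) / np.abs(np.diag(r))
    return q * phases

def single_qubit_op(op, target, n_qubits):
    """Embed a 2x2 operator on qubit `target` into the full n-qubit space."""
    full = np.array([[1.0 + 0j]])
    for q in range(n_qubits):
        full = np.kron(full, op if q == target else np.eye(2))
    return full

def otoc(U, W, V, psi):
    """Echo signal <psi| W(t)^dagger V^dagger W(t) V |psi>, W(t) = U^dagger W U."""
    Wt = U.conj().T @ W @ U  # forward evolution, the tweak, backward evolution
    return np.vdot(psi, Wt.conj().T @ V.conj().T @ Wt @ V @ psi)

n = 8
dim = 2 ** n
rng = np.random.default_rng(0)

X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
W = single_qubit_op(X, 0, n)      # the "tweak" on the first qubit
V = single_qubit_op(Z, n - 1, n)  # the probe on the last qubit

psi = np.zeros(dim, dtype=complex)
psi[0] = 1.0                      # all qubits start in |0>

echo_no_scrambling = otoc(np.eye(dim), W, V, psi).real
echo_scrambled = otoc(random_unitary(dim, rng), W, V, psi).real

print("echo without scrambling:", round(echo_no_scrambling, 3))
print("echo after scrambling:  ", round(echo_scrambled, 3))
```

Without any evolution the tweak never reaches the probe qubit, so the echo comes back at full strength. After the random evolution the tweak has spread across all eight qubits and the echo drops to a small value near zero. Note that the matrices here have 256 × 256 entries for just 8 qubits, which hints at why this brute-force approach does not scale.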

Why This Breakthrough Matters

Until now, these complex quantum behaviors were theoretically understood but practically unreachable.

Simulating them on classical supercomputers takes far longer, roughly 13,000 times longer for this experiment, because the amount of data involved grows exponentially.

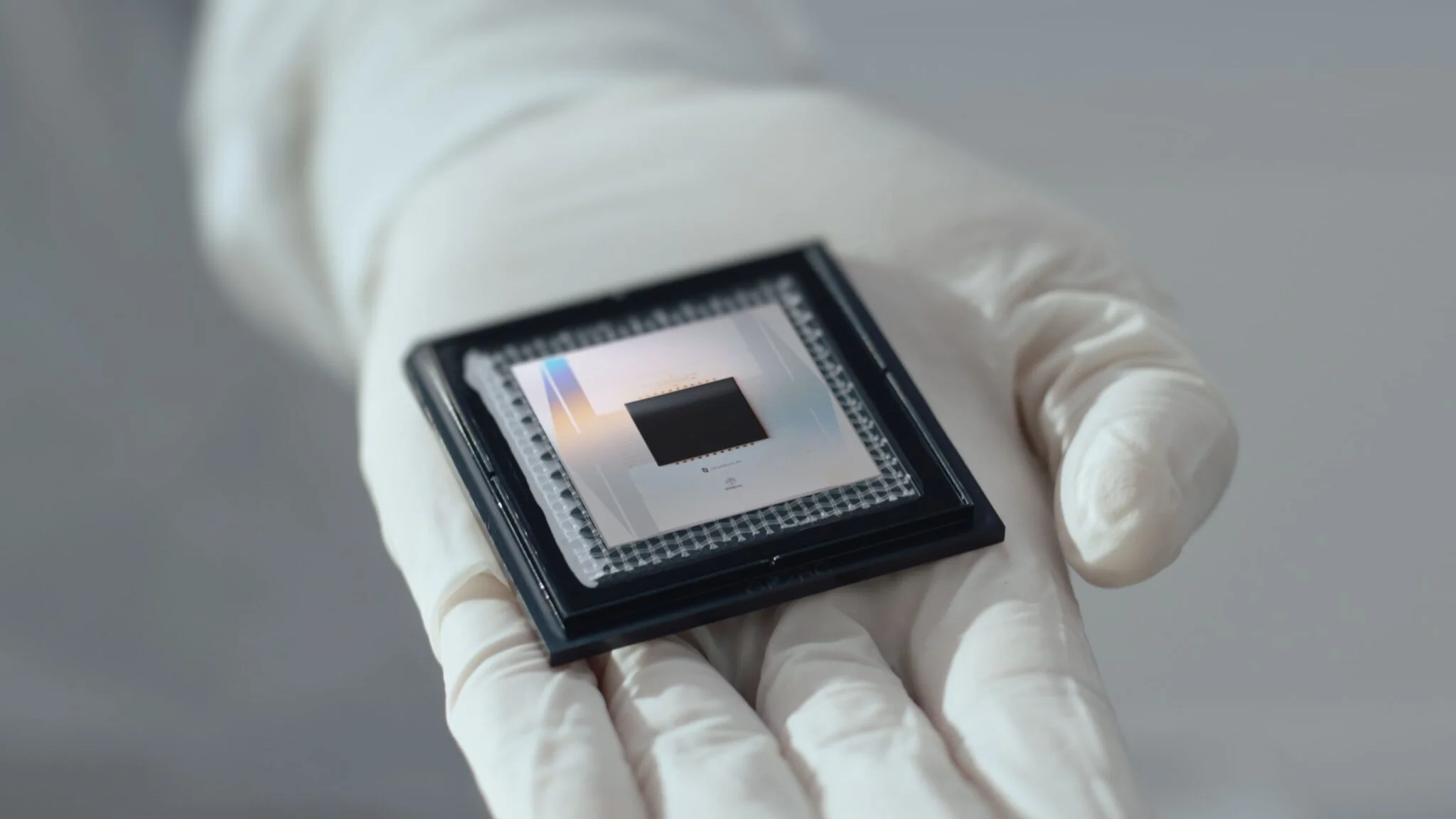

But with Google’s Willow quantum chip — equipped with 105 qubits and incredibly low error rates — Quantum Echoes achieved something historic:

For the first time, scientists didn’t just observe quantum behavior — they verified it computationally.

Classical Data vs Quantum Data — The Foundation Behind It All

To appreciate why this matters, let’s step back for a second.

What makes quantum data so powerful — and so different — from classical data?

| Aspect | Classical Data | Quantum Data |
| --- | --- | --- |
| Basic Unit | Bit (0 or 1) | Qubit (0 and 1 simultaneously) |
| Representation | Deterministic | Probabilistic (wave function) |
| Storage Growth | Linear | Exponential: n qubits = 2ⁿ states |
| Correlation | Independent bits | Entangled qubits |
| Measurement | Doesn’t alter value | Collapses wave function |
| Copying | Can clone | Cannot clone (No-Cloning Theorem) |
| Error Handling | Binary redundancy | Quantum error correction |
| Operation Type | Logical gates (AND, OR, NOT) | Quantum gates (Hadamard, CNOT, etc.) |
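Several rows of this comparison (superposition, entanglement, quantum gates, measurement) can be seen in a two-qubit toy simulation. The sketch below uses plain NumPy rather than any quantum SDK, and the variable names are chosen for this example only. It applies a Hadamard gate and a CNOT to the state |00⟩ and then samples simulated measurements.

```python
import numpy as np

H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)   # Hadamard gate
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]])                 # control = first qubit
I2 = np.eye(2)

state = np.array([1.0, 0.0, 0.0, 0.0])          # |00>
state = np.kron(H, I2) @ state                  # first qubit into superposition
state = CNOT @ state                            # entangle: (|00> + |11>) / sqrt(2)

probs = np.abs(state) ** 2                      # Born rule: outcome probabilities
probs = probs / probs.sum()                     # guard against rounding error

rng = np.random.default_rng(1)
labels = ["00", "01", "10", "11"]
samples = rng.choice(labels, size=10, p=probs)  # ten simulated measurements

print("probabilities:", dict(zip(labels, probs.round(3))))
print("samples:", list(samples))
```

Each simulated measurement returns either 00 or 11, never 01 or 10: the two qubits are perfectly correlated even though neither one has a definite value before it is measured.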

Simple Analogy: Light Switches vs Spinning Coins

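A classical bit is like a light switch: it is either on or off. A qubit is more like a spinning coin, a blend of heads and tails until it lands. This ability to exist in superposition, combined with entanglement, gives quantum computers a form of exponential parallelism: a single quantum state can span countless possibilities at once, even though a measurement returns only one outcome.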

That’s the magic of quantum data.

Why Quantum Echoes Needs Quantum Data

The Quantum Echoes algorithm depends on tracking how quantum data (information encoded in qubit states) moves, interferes, and entangles.

A classical computer can’t model this efficiently because classical bits simply don’t behave that way.

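Quantum data can sit in superposition, become entangled with other qubits, and cannot be copied or read out without being disturbed.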

That’s why simulating OTOC on a supercomputer is nearly impossible — the number of possible quantum states grows exponentially.
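A quick back-of-the-envelope calculation shows what exponential growth means in practice. The sketch assumes a brute-force simulation that stores every amplitude as a 16-byte complex number; the function name `statevector_bytes` is made up for this example. Real classical simulations use smarter methods than this, so read it as an illustration of the scaling, not as the basis of the 13,000× estimate.

```python
def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory needed to store all 2**n amplitudes of an n-qubit state."""
    return (2 ** n_qubits) * bytes_per_amplitude

for n in (10, 30, 50, 105):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n:>3} qubits -> {gib:.3e} GiB")
```

Ten qubits fit in 16 KiB and thirty qubits need 16 GiB, but each extra qubit doubles the requirement, so a full state vector for 105 qubits is far beyond any existing machine.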

Quantum hardware like the Willow chip does this natively and verifiably.

Real-World Implications

Quantum Echoes is more than just a physics experiment. It’s a glimpse into what’s coming in chemistry, materials science, and medicine.

In fact, using Quantum Echoes, Google and UC Berkeley modeled molecules with 15 and 28 atoms, producing results that matched traditional NMR — but with greater precision and less time.

The Out-of-Time-Order Correlator (OTOC) isn’t just a milestone for quantum computing. It’s a mirror showing us how information behaves in nature itself.

And for the first time, we have the tools to measure it, verify it, and learn from it, faster than any classical machine ever could. We’re not just processing data anymore. We’re listening to the echoes of the universe, and finally starting to understand them.